Jet Infosystems: processing 30% of support requests using YandexGPT

In one month, the company developed a service center automation system based on YandexGPT.

Background

Jet Infosystems

The YandexGPT-based system determines the topic of a support request and generates answers to frequently asked questions from the appropriate knowledge base.

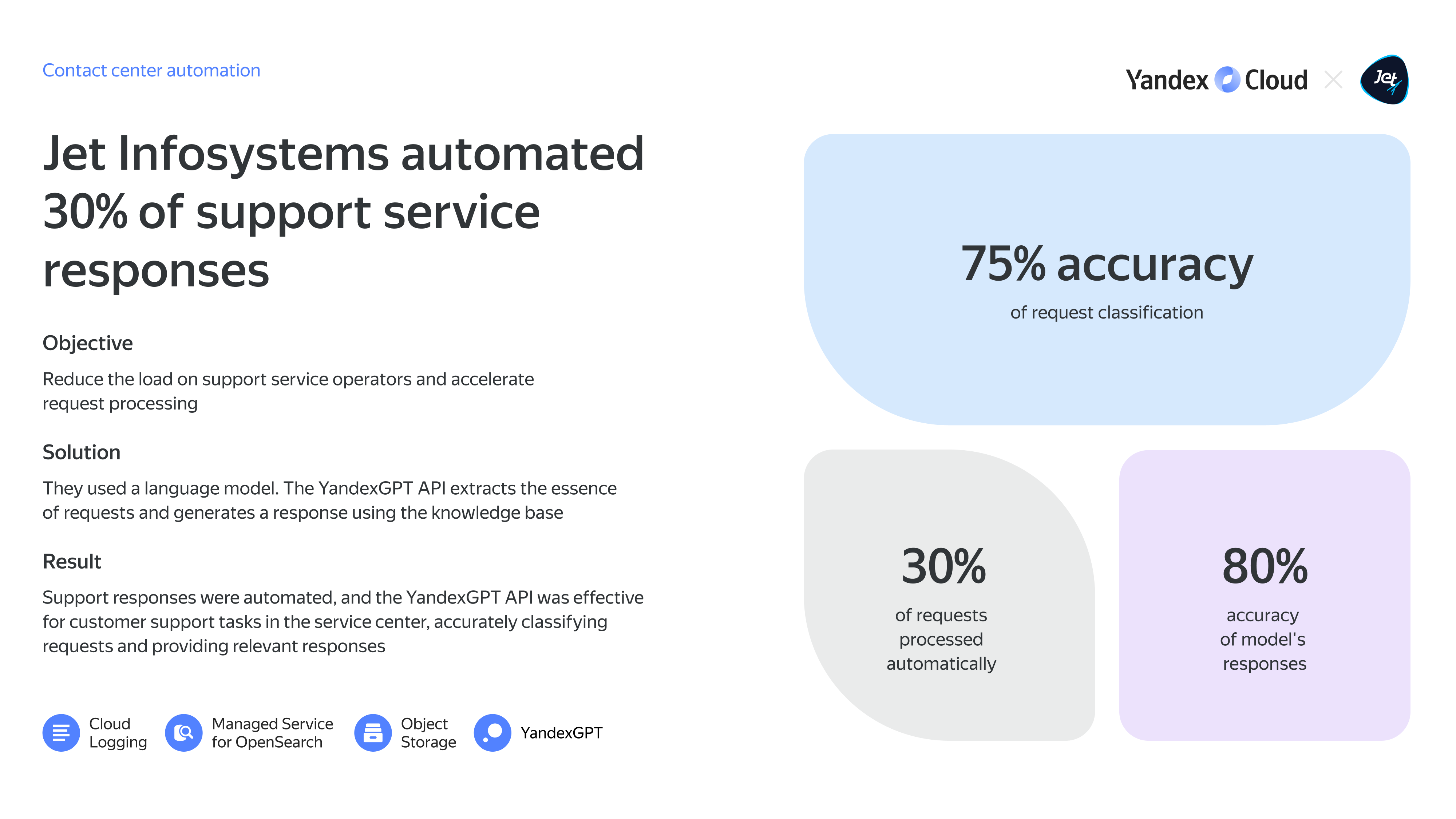

As a result, the company has automated the processing of 30% of requests, and 75% of responses do not require additional processing by support center employees.

Reduce the load on support service operators and accelerate request processing

The company’s service center works around the clock and responds to more than 21,000 requests each month. In their responses, employees rely on a knowledge base containing information about all cases over the last 30 years. More complex requests, such as advanced troubleshooting or issues regarding access to resources, are escalated to L2 support experts.

Due to employee workload, request length, and varying employee skill levels, request processing sometimes takes a while and responses are delayed. Jet Infosystems therefore decided to speed up request processing and reduce the routine burden on employees, and the management turned to LLMs to automate it. The system they had in mind had to categorize requests, find information in knowledge bases and previous requests, collect all user requests in one log, and provide an answer. If it failed to find anything, it had to escalate the request to experts.

To create a proprietary LLM-enabled system, Jet Infosystems opted for a cloud infrastructure. This made it possible to reduce the cost and time of deploying and maintaining the product and to build a CI/CD pipeline. To always have the latest model version at their disposal and speed up adoption of the product, the management looked for a public LLM accessible through a cloud API.

The team chose Yandex Cloud because of its developed infrastructure and managed services, and YandexGPT for its flexible and convenient API, localization, and compliance with Russian regulatory requirements.

A language model for service center automation

The Jet Infosystems ML center team began by planning the project. First, they defined and analyzed the milestones for automating the work of service center employees. They then collected historical data and designed a prototype under the supervision of an architect.

At that stage, they tested various approaches: models with and without fine-tuning, foundation models and lightweight models, classification models, and so on. Specialists generated hypotheses, tested them, and included the most effective options in the MVP. Service center employees then checked how the solution handled real tasks so that it could be corrected.

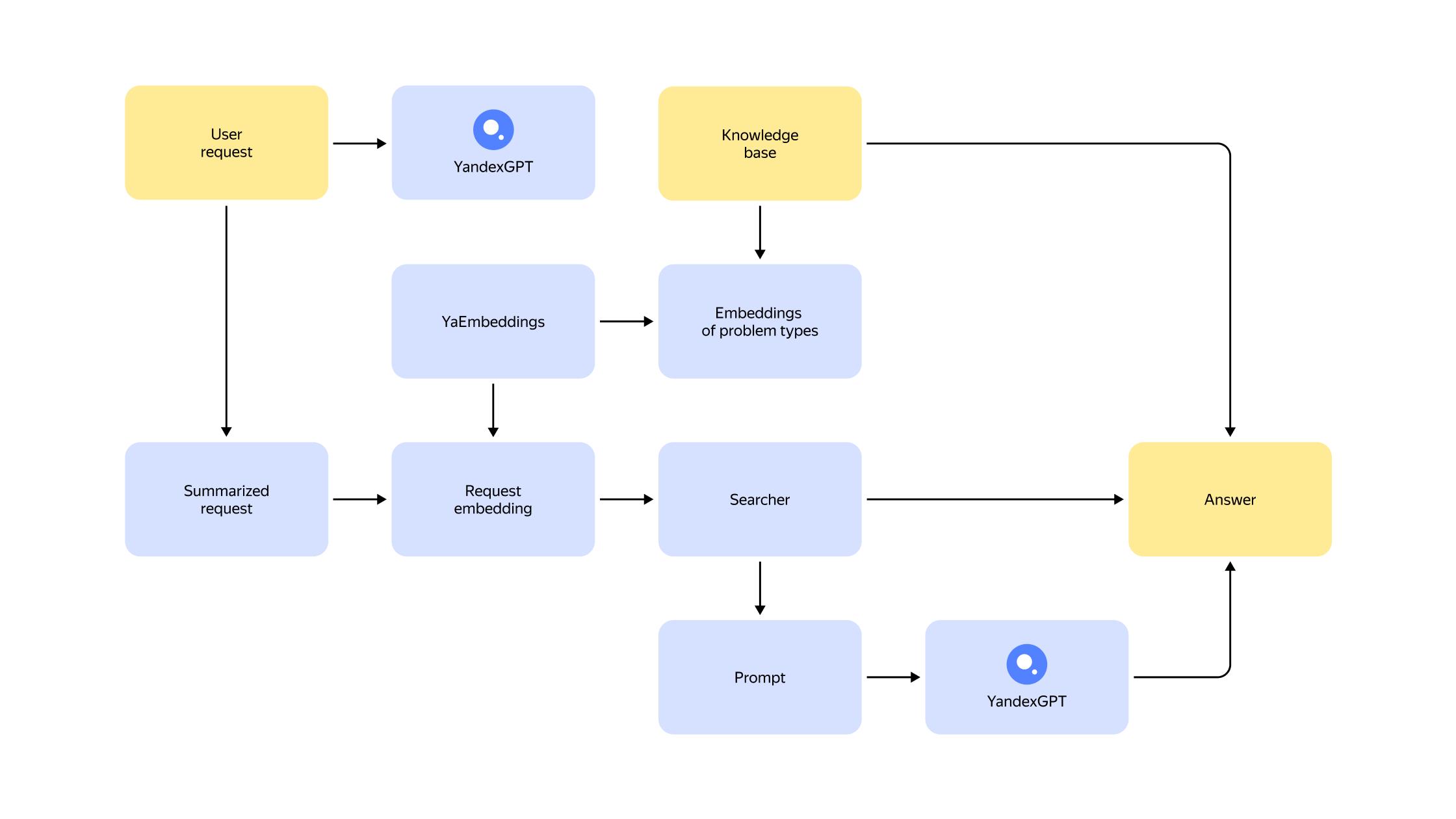

The ML team analyzed 13,000 requests sent over 30 days and identified the roughly 100 most common request types. While processing the requests, they ran into a problem: some tickets lacked a detailed description and contained only screenshots or a brief resolution. To avoid collecting data for the dataset manually, the ML team developed an application based on the LangChain library to vectorize and index users’ text requests.
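The idea of vectorizing and indexing text requests can be illustrated with a minimal sketch. It does not use LangChain or a real embedding model: plain term-frequency vectors and cosine similarity stand in for embeddings, and the `RequestIndex` class and the sample tickets are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Turn text into a sparse term-frequency vector (a stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class RequestIndex:
    """Hypothetical in-memory index of vectorized requests."""

    def __init__(self):
        self._items = []  # list of (original text, vector) pairs

    def add(self, text: str) -> None:
        self._items.append((text, vectorize(text)))

    def search(self, query: str, k: int = 3):
        """Return up to k (score, text) pairs, most similar first."""
        q = vectorize(query)
        scored = [(cosine(q, vec), text) for text, vec in self._items]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


# Made-up tickets, for illustration only.
index = RequestIndex()
index.add("Cannot connect to VPN from home laptop")
index.add("Password reset for corporate email")
index.add("Printer on the third floor is offline")

best_score, best_text = index.search("vpn connection fails on my laptop", k=1)[0]
print(best_text)
```

In a production setup, the vectorizer would be replaced by an embedding model and the list by a vector store; the add-then-search interface stays the same.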

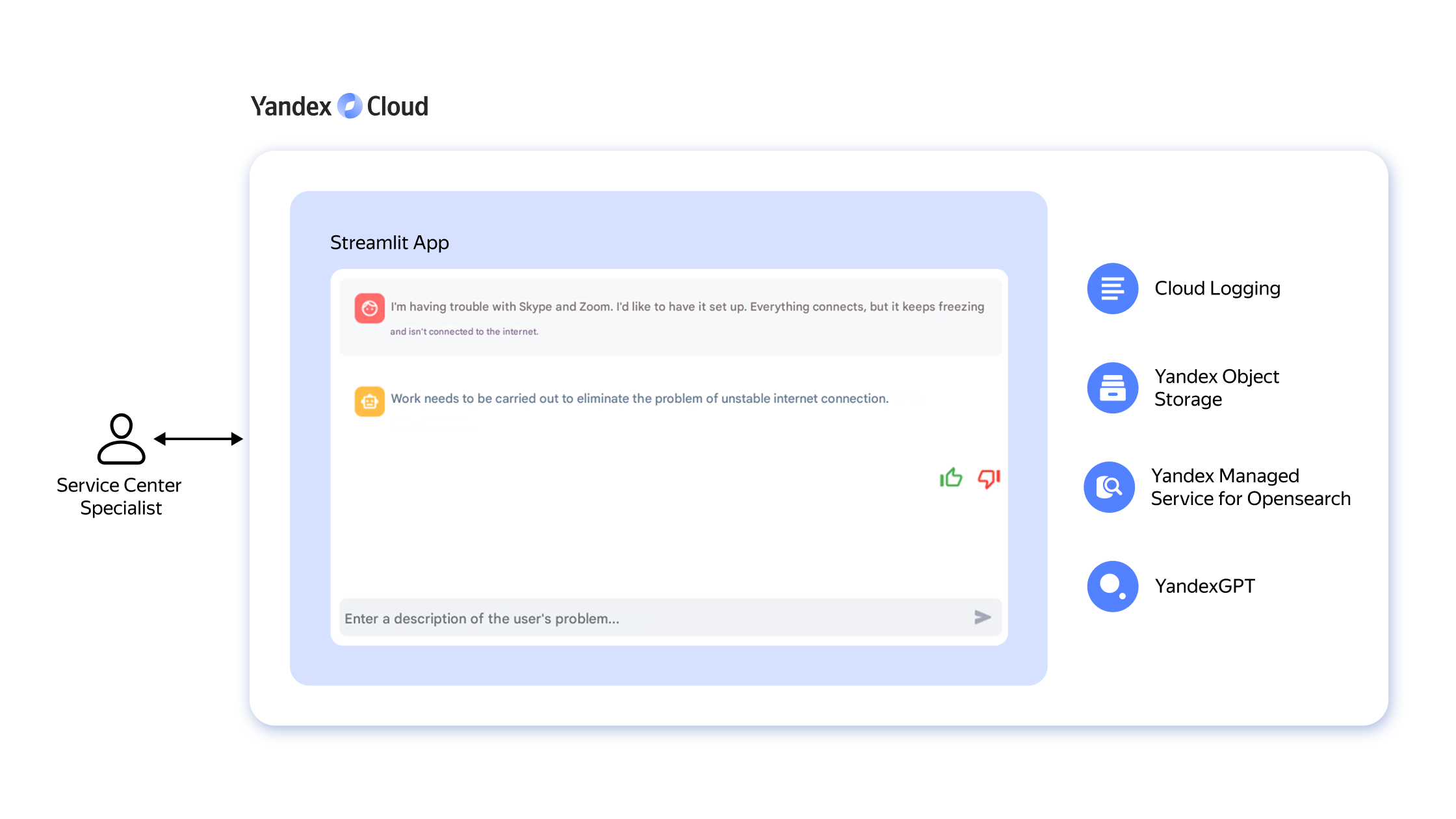

The next stage involved building a knowledge base with answers to frequently asked questions and a history of answers to specific user requests. The team hosted it in Yandex Object Storage and uses Yandex Managed Service for OpenSearch to search it for answers.
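A knowledge-base lookup in OpenSearch might be expressed with a query like the one sketched below. The article does not describe the actual index layout, so the field names `text` and `category` and the helper `build_kb_query` are assumptions; only the query DSL constructs (`bool`, `match`, `term`, `size`) are standard OpenSearch.

```python
import json


def build_kb_query(summary: str, category: str, size: int = 5) -> dict:
    """Build an OpenSearch query DSL body for a knowledge-base lookup.

    The field names "text" and "category" are hypothetical.
    """
    return {
        "size": size,  # number of documents to return
        "query": {
            "bool": {
                # Full-text match of the request summary against document text.
                "must": [{"match": {"text": summary}}],
                # Restrict the search to documents of the classified request type.
                "filter": [{"term": {"category": category}}],
            }
        },
    }


body = build_kb_query("VPN connection fails after password change", "vpn")
# This body would be sent to the index's _search endpoint.
print(json.dumps(body, indent=2))
```

Filtering by the request type predicted by the classifier is one way to make sure the answer is drawn from the appropriate part of the knowledge base.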

The tool summarizes the user’s request, extracts its essence using a query prompt, and then searches the knowledge base for relevant documents. After that, there are two possible scenarios:

- YandexGPT finds a case similar to the request and gives a response automatically.

- The model gathers information from the knowledge base and formulates a new solution. This response is then forwarded to operators for review and, if necessary, correction.
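The two scenarios, together with escalation to experts when nothing is found, can be sketched as a small routing function. This is an illustration under stated assumptions, not the team's implementation: the similarity threshold, the `Hit` and `Decision` types, and the `generate` callable (a placeholder for the LLM call) are all hypothetical, and the auto-reply branch simply returns the stored resolution.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional, Sequence


class Route(Enum):
    AUTO_REPLY = "auto_reply"            # similar case found, answer sent automatically
    OPERATOR_REVIEW = "operator_review"  # new answer drafted, operator reviews it
    ESCALATE = "escalate"                # nothing relevant found, experts take over


@dataclass
class Hit:
    text: str     # knowledge-base document or past resolution
    score: float  # similarity to the request, assumed to lie in [0, 1]


@dataclass
class Decision:
    route: Route
    answer: Optional[str]


def handle_request(
    summary: str,
    hits: Sequence[Hit],
    generate: Callable[[str, Sequence[Hit]], str],
    similar_case_threshold: float = 0.85,  # hypothetical cut-off
) -> Decision:
    """Route a summarized request based on knowledge-base search results."""
    if not hits:
        return Decision(Route.ESCALATE, None)
    best = max(hits, key=lambda h: h.score)
    if best.score >= similar_case_threshold:
        return Decision(Route.AUTO_REPLY, best.text)
    # No close match: draft a new answer from the retrieved documents.
    return Decision(Route.OPERATOR_REVIEW, generate(summary, hits))


def fake_generate(summary: str, hits: Sequence[Hit]) -> str:
    """Stand-in for the LLM call; returns a placeholder draft."""
    return f"Draft for '{summary}' based on {len(hits)} document(s)"


decision = handle_request(
    "VPN fails after password change",
    [Hit("Re-enter the new password in the VPN client", 0.91)],
    fake_generate,
)
print(decision.route.value, "->", decision.answer)
```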

After collecting feedback from the support service staff, the team finalized the model. First and foremost, this meant filling the knowledge base with relevant documents. The experts then enhanced the request classifier to cover more scenarios. Now that the pilot period is over, the team continues to tune the model so that its responses are more accurate, complete, and stylistically appropriate for the service center.

Results

The pilot project took a month and a half and showed the effectiveness of using YandexGPT to solve user support problems at the Jet Infosystems service center.

During the pilot project, up to 30% of the requests to the service center were processed automatically. Classification accuracy across 100 request types amounted to 75%, while the LLM gave correct answers 80% of the time.
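Metrics of this kind are typically computed from tickets that a reviewer has checked by hand. The sketch below shows one way to do that; the `ReviewedTicket` fields are assumptions, and the sample records are toy values for illustration only, not Jet Infosystems data.

```python
from dataclasses import dataclass


@dataclass
class ReviewedTicket:
    automated: bool       # handled without operator involvement
    predicted_type: str   # request type chosen by the classifier
    true_type: str        # request type confirmed by a reviewer
    answer_correct: bool  # reviewer's verdict on the generated answer


def share(flags) -> float:
    """Fraction of True values; 0.0 for an empty input."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def pilot_metrics(tickets) -> dict:
    return {
        "automation_rate": share(t.automated for t in tickets),
        "classification_accuracy": share(
            t.predicted_type == t.true_type for t in tickets
        ),
        "answer_accuracy": share(t.answer_correct for t in tickets),
    }


# Toy records, for illustration only.
sample = [
    ReviewedTicket(True, "vpn", "vpn", True),
    ReviewedTicket(False, "email", "vpn", False),
    ReviewedTicket(False, "printer", "printer", True),
    ReviewedTicket(True, "email", "email", True),
]
metrics = pilot_metrics(sample)
print(metrics)
```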

The pilot project was a success, and the system is now slated to go into production. The company continues to improve the model and plans to implement it as an auxiliary tool for technical support engineers, significantly speeding up request processing.