Yandex Data Processing

A service for processing multi-terabyte datasets with open-source tools such as Apache Spark™, Apache Hadoop®, Apache HBase®, Apache Hive™, Apache Zeppelin™, and other Apache® ecosystem services.

Easy-to-use

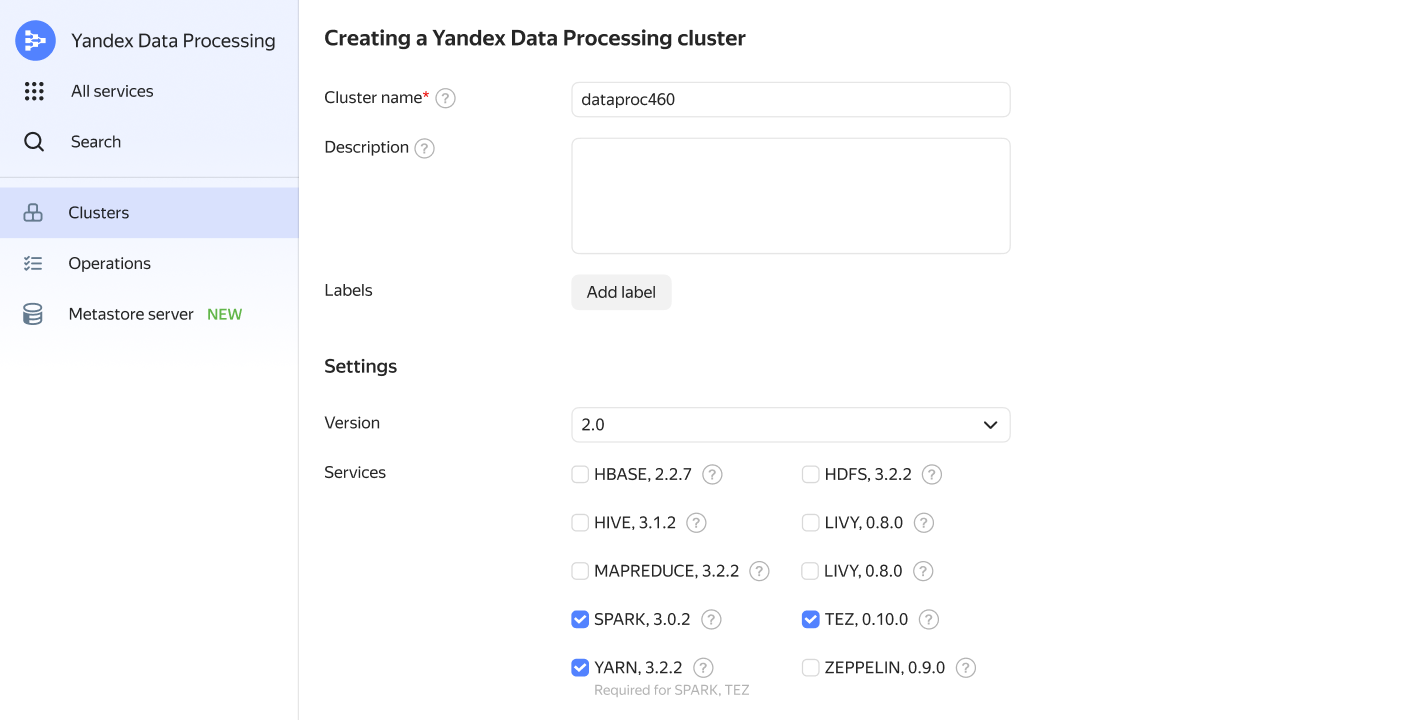

Choose the cluster size, node capacity, and set of services, and Yandex Data Processing automatically creates and configures Spark and Hadoop clusters and other components. Collaborate through Zeppelin notebooks and other web apps, accessed via UI Proxy.

Low costs

Launch Yandex Data Processing for as little as 18 RUB/hour. Save up to 70% on VMs by choosing preemptible instances.
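As a rough illustration of the savings arithmetic, the sketch below estimates the hourly VM cost of a cluster that mixes regular and preemptible nodes. The per-node price and node counts are hypothetical placeholders, not actual Yandex Cloud rates, and the 70% figure is treated as the upper bound quoted above rather than a guaranteed discount.

```python
def estimate_hourly_vm_cost(node_price, regular_nodes, preemptible_nodes,
                            preemptible_discount=0.70):
    """Estimate the hourly VM cost of a mixed cluster.

    node_price           -- hypothetical full price of one node, RUB/hour
    regular_nodes        -- nodes billed at the full price
    preemptible_nodes    -- nodes billed at the discounted price
    preemptible_discount -- fraction saved on preemptible nodes
                            (0.70 is the "up to" figure, an assumption here)
    """
    if not 0 <= preemptible_discount <= 1:
        raise ValueError("preemptible_discount must be between 0 and 1")
    regular_cost = node_price * regular_nodes
    preemptible_cost = node_price * preemptible_nodes * (1 - preemptible_discount)
    return round(regular_cost + preemptible_cost, 2)


# Hypothetical cluster: 2 regular nodes and 8 preemptible nodes at 10 RUB/hour each.
all_regular = estimate_hourly_vm_cost(10.0, 10, 0)
mixed = estimate_hourly_vm_cost(10.0, 2, 8)
print(f"all regular: {all_regular} RUB/hour, mixed: {mixed} RUB/hour")
```

Preemptible VMs can be stopped by the platform at any time, so in practice the discount usually applies only to the nodes whose work can be safely re-run.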

Full control of your cluster

You get full control of your cluster with root permissions for each VM. Install your own applications and libraries on running clusters without having to restart them.

Autoscaling (Preview)

Yandex Data Processing uses Instance Groups to automatically scale the computing resources of compute subclusters up or down based on CPU utilization.
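To make the idea of CPU-based scaling concrete, here is a minimal sketch of a generic target-tracking rule: the host count is adjusted so that average CPU utilization moves toward a target value, within fixed bounds. This is an illustrative assumption, not the actual algorithm used by Instance Groups; the function name and all parameters are hypothetical.

```python
import math


def desired_host_count(current_hosts, avg_cpu_percent, target_cpu_percent,
                       min_hosts, max_hosts):
    """Return the host count that would bring average CPU close to the target.

    Generic target-tracking rule (an assumption, not the service's own logic):
    desired = ceil(current_hosts * avg_cpu / target_cpu), clamped to
    the [min_hosts, max_hosts] range.
    """
    if current_hosts <= 0:
        raise ValueError("current_hosts must be positive")
    if not 0 < target_cpu_percent <= 100:
        raise ValueError("target_cpu_percent must be in (0, 100]")
    if min_hosts > max_hosts:
        raise ValueError("min_hosts must not exceed max_hosts")
    raw = math.ceil(current_hosts * avg_cpu_percent / target_cpu_percent)
    return max(min_hosts, min(max_hosts, raw))


# Four hosts running at 90% CPU with a 60% target: scale out.
print(desired_host_count(4, 90, 60, min_hosts=1, max_hosts=10))
# Four hosts running at 15% CPU: scale in, but not below the minimum.
print(desired_host_count(4, 15, 60, min_hosts=2, max_hosts=10))
```

A real autoscaler would typically also average the metric over a time window and apply warm-up and cooldown periods to avoid oscillating between sizes.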

Managing table metadata

Yandex Data Processing allows you to create managed Hive Metastore clusters, which can reduce the probability of failures and losses caused by metadata unavailability.

Task automation

Spend less time building ETL pipelines, model training and development pipelines, and other recurring tasks. The Yandex Data Proc operator is already built into Apache Airflow.

We’ll take care of most cluster maintenance

Independent control

Control on the Yandex Cloud side

Getting started

Select the necessary computing capacity and Apache® services and create a ready-to-use Yandex Data Processing cluster.

FAQ

Spark™, HDFS, YARN, Hive, HBase®, Oozie™, Sqoop™, Flume™, Tez®, and Zeppelin™.

Get started with Yandex Data Processing

Apache, Apache Hadoop, Apache Spark, and Apache Oozie are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.