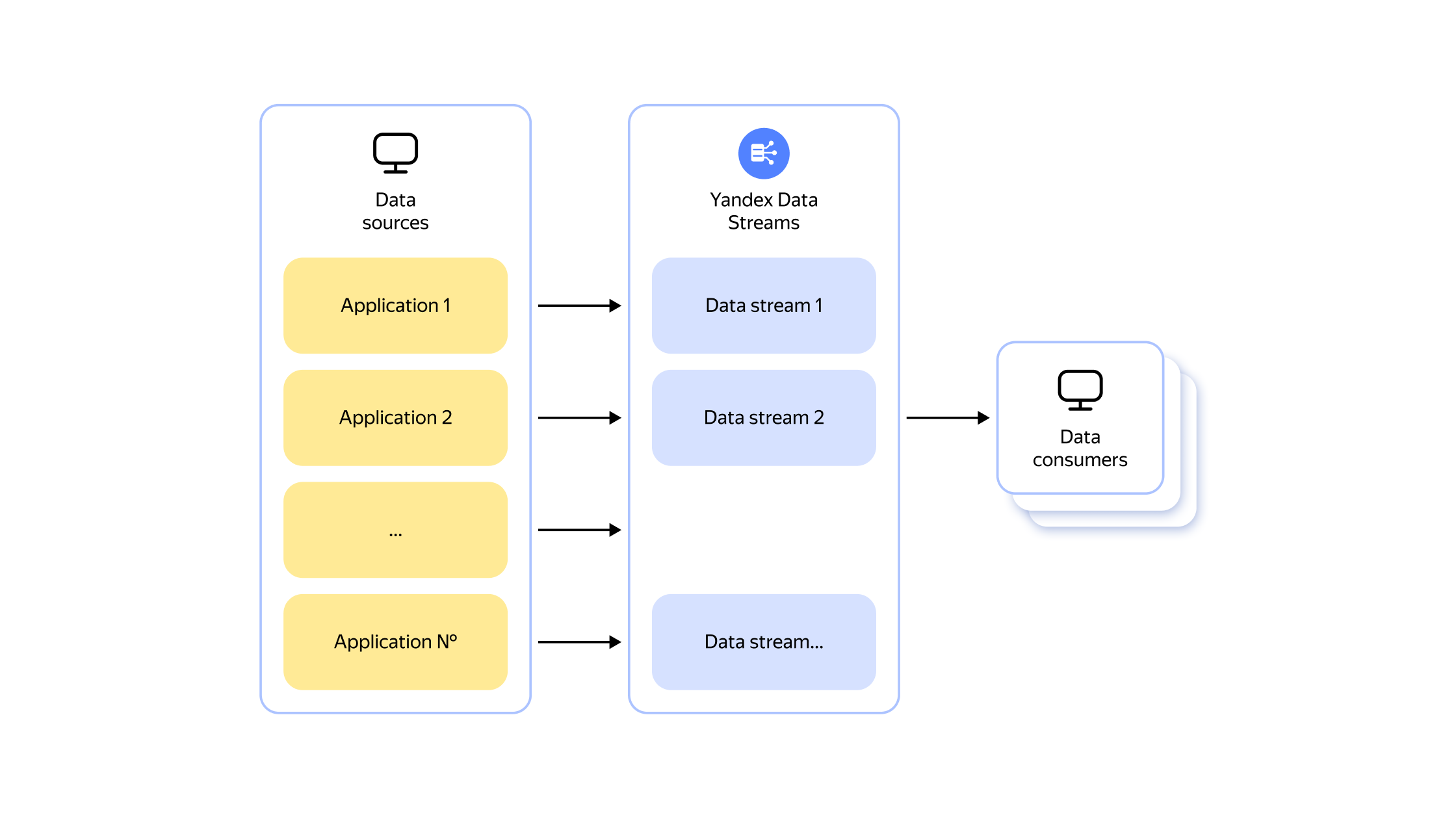

Stream processing with Data Streams

Yandex Data Streams can continuously collect data from sources such as website browsing histories, application and system logs, and social media feeds.

Yandex Data Streams

A scalable service for managing real-time data streams, built on YDB Topics.

It simplifies data exchange between components in microservice architectures. As a transport layer between microservices, it makes integration easier, increases reliability, and improves scalability.

Compatible with the Apache Kafka® and AWS Kinesis Data Streams protocols.
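
Because the service accepts the AWS Kinesis Data Streams protocol, a producer written for Kinesis can in principle be pointed at it. This minimal sketch assumes that setup and only prepares request payloads: it encodes JSON events as records in the shape taken by the Kinesis `PutRecords` call (`Data` bytes plus a `PartitionKey`) and groups them into batches. The 500-record cap is the documented Kinesis limit; whether Data Streams enforces the same limit is an assumption, and the field name `user_id` is hypothetical.

```python
import json
from typing import Iterable, Iterator

# Kinesis documents at most 500 records per PutRecords call; whether
# Data Streams applies the same cap is an assumption, so it is a parameter.
MAX_RECORDS_PER_CALL = 500


def to_record(event: dict, key_field: str) -> dict:
    """Encode one event as a Kinesis-style record (Data bytes + PartitionKey)."""
    return {
        "Data": json.dumps(event, separators=(",", ":")).encode("utf-8"),
        # In the Kinesis model, records sharing a partition key go to the
        # same partition, which preserves their relative order.
        "PartitionKey": str(event[key_field]),
    }


def batch_records(events: Iterable[dict], key_field: str,
                  max_per_call: int = MAX_RECORDS_PER_CALL) -> Iterator[list]:
    """Yield lists of records, each small enough for one PutRecords call."""
    batch = []
    for event in events:
        batch.append(to_record(event, key_field))
        if len(batch) == max_per_call:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


if __name__ == "__main__":
    # Hypothetical page-view events keyed by user_id.
    events = [{"user_id": i % 3, "page": f"/item/{i}"} for i in range(7)]
    for records in batch_records(events, "user_id", max_per_call=3):
        print(len(records), records[0]["PartitionKey"])
```

With boto3, each batch would be passed to `client.put_records(StreamName=..., Records=records)` on a client created with `boto3.client("kinesis", endpoint_url=..., region_name=...)`; the endpoint URL, region, and stream name come from your Data Streams setup and are not given here.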

Write and read data in near real time. You can configure the data transfer rate and the storage period.

Fine-tune resources to process data streams (topics) with different bandwidths: from 100 KB/s to 100 MB/s and higher. Automatic scaling of data streams (topics) is also available.
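
The bandwidth and storage settings translate into simple capacity arithmetic: the partition count is the target write rate divided by the per-partition rate, rounded up, and the volume held in a stream is roughly the write rate multiplied by the storage time. This Python sketch assumes a hypothetical per-partition throughput of 1 MB/s; actual per-partition limits should be taken from the service's quotas.

```python
import math


def partitions_needed(target_kb_s: float, per_partition_kb_s: float) -> int:
    """Smallest partition count whose combined write throughput covers the target."""
    if target_kb_s <= 0 or per_partition_kb_s <= 0:
        raise ValueError("throughput values must be positive")
    return math.ceil(target_kb_s / per_partition_kb_s)


def retained_volume_gb(write_kb_s: float, retention_hours: float) -> float:
    """Approximate data held in the stream at a constant write rate."""
    seconds = retention_hours * 3600
    return write_kb_s * seconds / 1_000_000  # KB -> GB (decimal units)


if __name__ == "__main__":
    # Hypothetical figures: 100 MB/s of logs, 1 MB/s per partition, 24 h storage.
    print(partitions_needed(100_000, 1_000))   # 100
    print(retained_volume_gb(100_000, 24))     # 8640.0
```

At the low end of the range, 100 KB/s fits in a single partition. When automatic scaling is enabled, a figure like this is best treated as a starting point rather than a fixed allocation.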

Work with YDB tables and data streams (topics) within a single transaction in the Managed Service for YDB.

Data is automatically replicated across multiple geographically distributed availability zones.

After creating data streams (topics), you can manage them centrally in the console or via the API.

With Yandex Data Transfer, a single data stream (topic) can be transferred to several targets with different storage policies.


Data Streams ingests data from the sources; Data Transfer then reads it, splits it into columns and rows, and stores it in one or more target systems, such as ClickHouse, other databases, or Object Storage. The transmitted data can then be processed in Cloud Functions to mask sensitive information, change its format, or perform other types of processing.
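
The per-record processing described above (splitting a message into columns and rows, then masking sensitive information) can be sketched in plain Python. This is an illustration under stated assumptions, not Data Transfer's parser or a Cloud Functions handler: the JSON message format, the column list `COLUMNS`, and the sensitive column `user_email` are all hypothetical.

```python
import hashlib
import json
from typing import Iterable

# Hypothetical target schema, e.g. the columns of a ClickHouse table.
COLUMNS = ("ts", "user_email", "url", "status")
# Hypothetical set of columns treated as sensitive.
SENSITIVE = {"user_email"}


def mask(value: str) -> str:
    """Replace a sensitive value with a short, stable SHA-256 digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:16]


def to_rows(messages: Iterable[bytes]) -> list:
    """Parse JSON messages into tuples ordered by COLUMNS, masking sensitive fields."""
    rows = []
    for raw in messages:
        record = json.loads(raw)
        row = []
        for column in COLUMNS:
            value = record.get(column)  # missing fields become None (NULL)
            if column in SENSITIVE and value is not None:
                value = mask(str(value))
            row.append(value)
        rows.append(tuple(row))
    return rows


if __name__ == "__main__":
    batch = [
        b'{"ts": "2024-05-01T12:00:00Z", "user_email": "a@example.com", "url": "/cart", "status": 200}',
        b'{"ts": "2024-05-01T12:00:01Z", "url": "/", "status": 404}',
    ]
    for row in to_rows(batch):
        print(row)
```

Hashing keeps masked values joinable across rows without exposing them, but a plain digest of a guessable value such as an e-mail address is pseudonymization rather than anonymization; a keyed hash (for example `hmac` with a secret) is a stronger choice.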


Logstash is a trademark of Elasticsearch BV in the United States and/or other countries.

Apache® and Apache Kafka® are registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.

ClickHouse is a registered trademark of ClickHouse, Inc.