Analyzing data with Jupyter

Yandex Query supports integration with Jupytercell (%%yq) and line (%yq) Python magic commands. The integration allows you to streamline data collection and analysis for a more efficient and straightforward workflow.

To analyze Query data with Jupyter:

- Install and configure the

yandex_query_magicpackage. - Try creating query templates.

- Process the execution results.

Getting started

-

Sign up for Yandex Cloud and create a billing account:

- Navigate to the management console

- On the Yandex Cloud Billing

ACTIVEorTRIAL_ACTIVEstatus. If you do not have a billing account, create one and link a cloud to it.

If you have an active billing account, you can navigate to the cloud page

Learn more about clouds and folders here.

- Navigate to the management console

-

Get access

Installing and configuring the yandex_query_magic package

Install the yandex_query_magic

%pip install yandex_query_magic --upgrade

-

Install the yandex_query_magic

pip install yandex_query_magic --upgrade -

Enable the Jupyter extension for the Jupyter Notebook UI controls:

%jupyter contrib nbextension install --userIf you get the

"No module named 'notebook.base'"error, try upgrading to Jupyter Notebook 6.4.12:pip install --upgrade notebook==6.4.12

Configuring the package

To configure the yandex_query_magic package, you can use the yq_settings line command with the following arguments specified:

%yq_settings --folder-id <folder_ID> ...

Available parameters:

--folder-id <folder_id>: ID of the folder used to run Query queries. The folder hosting a VM instance with Jupyter is used by default.--vm-auth: Authentication with the VM account key. For more information, see Using Yandex Cloud from within a VM.--env-auth <environment_variable>: Authentication with the authorized key kept in the environment variable. Use this mode when you cannot access the file system of the computer running Jupyter. For example, in Yandex DataSphere. In which case create a DataSphere secret and specify its name in the--env-authparameter.--sa-file-auth <authorized_key>: Authentication with authorized keys. For more information, see Creating an authorized key.

Testing the package

You can use the %yq line magic command with a single-line SQL query. In this case, the %yq keyword is used to execute the query.

If Jupyter is running on a VM with an attached service account, upload the extension to Jupyter:

%load_ext yandex_query_magic

%yq SELECT "Hello, world!"

Where:

%yq: Jupyter magic name.SELECT "Hello, world!": Text of query to Query.

If the VM does not have any attached service accounts:

-

Create a service account and assign the

yq.viewerrole to it. -

Create an authorized key for the service account.

-

Run the following commands specifying the path to the authorized key file:

%load_ext yandex_query_magic %yq_settings --sa-file-auth '<path_to_key_file>' %yq SELECT "Hello, world!"Here is an example:

%load_ext yandex_query_magic %yq_settings --sa-file-auth '/home/test/authorized_key.json' %yq SELECT "Hello, world!"The path to the

authorized_key.jsonfile is specified relative to the directory the current Jupyter Notebook file is saved in.

To send multi-line SQL queries, you need to use %%yq cell magic. The query text must begin with the %%yq keyword:

%%yq --folder-id <folder_ID> --name "My query" --description "Test query" --raw-results

SELECT

col1,

COUNT(*)

FROM table

GROUP BY col1

Where:

--folder-id: ID of the folder used to run Query queries. The default folder is the one specified earlier through%yq_settings. If not specified, it defaults to the folder in which the VM is running.--name: Query name.--description: Query description.--raw-results: Returns the unprocessed results of a query run in Query. For the format specification, refer to Correspondence of YQL and JSON types.

Creating query templates using the mustache syntax

You can use the templates of the computations exchanged between Jupyter and Query to work with queries or perform standard operations without writing code. For this purpose, Query has built-in support for the mustache syntax{{}} key symbols. You can use the mustache syntax with Jinja2

The Query built-in mustache templates allow you to insert variables from the Jupyter runtime environment directly into SQL queries. Such variables will also be automatically converted into the required Query data structures. For example:

myQuery = "select * from Departments"

%yq {{myQuery}}

The {{myQuery}} mustache string will be interpreted as the name of the source variable for the text, and select * from Departments will be sent to Query for execution.

Using mustache templates streamlines the integration between Jupyter and Query. Let's assume you have the lst=["Academy", "Physics"] Python list containing the names of departments whose data you want to process. Without the mustache syntax support in Query, first, you would need to convert the Python list into a string and then input it into the SQL query. Query example:

var lstStr = ",".join(lst)

sqlQuery = f'select "Academy" in ListCreate({lstStr});

%yq {{sqlQuery}}

I.e., working with complex data types requires a detailed knowledge of the Query SQL syntax. With the mustache syntax, you can write a much simpler query:

%yq select "Academy" in {{lst}}

Here, lst will be identified as a Python list and automatically get the correct SQL structure for list processing. This is the final query that will be sent to Query:

%yq select "Academy" in ListCreate("Academy", "Physics") as lst

Jinja2

To perform standard tasks in Jupyter and Query, we recommend using the built-in mustache syntax. For more advanced templating, you can use Jinja2.

To install Jinja2, run this command:

%pip install Jinja2

Example of using a Jinja template with the for cycle:

{% for user in users %}

command = "select * from users where name='{{ user }}'"

{% endfor %}

You can also use Jinja templates to perform various data processing operations. This example illustrates operations performed on department names based on whether the student belongs to the department:

{% if student.department == "Academy" %}

{{ student.department|upper }}

{% elif upper(student.department) != "MATHS DEPARTMENT" %}

{{ student.department|capitalize }}

{% endif %}

To make sure Jinja conversions comply with the Query rules, use the special to_yq filter. Here is what the lst=["Academy", "Physics"] Python list from the above example looks like in a Jinja template:

%%yq --jinja2

select "Academy" in {{lst|to_yq}}

If you need to disable templating, use the --no-var-expansion argument:

%%yq --no-var-expansion

...

Built-in mustache templates

In Yandex Query, the built-in mustache templates are enabled by default and help to streamline basic operations with Jupyter variables:

lst=["Academy", "Physics"]

%yq select "Academy" in {{lst}}

Using Pandas DataFrame variables

Here is an example of using yandex_query_magic and the mustache syntax with Pandas DataFrame

-

Declare the variable in Jupyter:

df = pandas.DataFrame({'_float': [1.0], '_int': [1], '_datetime': [pd.Timestamp('20180310')], '_string': ['foo']})

You can use df as a variable in queries to Yandex Query. During query execution, the df value is used to create a temporary table also named df. The table can be used within the Yandex Query query that is currently running.

-

Get the data:

%%yq SELECT * FROM mytable INNER JOIN {{df}} ON mytable.id=df._int

Table of Pandas types mapping to Query types:

| Pandas type | YQL type | Note |

|---|---|---|

| int64 | Int64 | Exceeding the int64 limit will result in a query execution error. |

| float64 | Double | |

| datetime64[ns] | Timestamp | Precision to the microsecond. Specifying nanoseconds (in the nanosecond field |

| str | String |

Using Python dict variables

Here is an example of using yandex_query_magic and the mustache syntax with a Python dict:

-

Declare the variable in Jupyter:

dct = {"a": "1", "b": "2", "c": "test", "d": "4"}Now you can use the

dctvariable directly in Query queries. When you execute a query,dctwill be converted into the relevant YQL DictKey Value a "1" b "2" c "test" d "4" -

Get the data:

%%yq SELECT "a" in {{dct}}

Table of Python dict types mapping to Query types:

| Python type | YQL type | Note |

|---|---|---|

| int | Int64 | Exceeding the int64 limit will result in a query execution error. |

| float | Double | |

| datetime | Timestamp | |

| str | String |

You can also convert a dictionary into a Pandas DataFrame table using a constructor:

df = pandas.DataFrame(dct)

Using Python list variables

Here is an example of using yandex_query_magic and the mustache syntax with a Python list:

-

Declare the variable in Jupyter:

lst = [1,2,3]Now you can use the

lstvariable directly in Query queries. When you execute a query,lstwill be converted into the relevant YQL List -

Get the data:

%%yq SELECT 1 IN {{lst}}

Table of Python list types mapping to Query types:

| Python type | YQL type | Note |

|---|---|---|

| int | Int64 | Exceeding the int64 limit will result in a query execution error. |

| float | Double | |

| datetime | Timestamp | |

| str | String |

You can also convert a list into a Pandas DataFrame table using a constructor:

df = pandas.DataFrame(lst,

columns =['column1', 'column2', 'column3'])

Jinja templates

Jinja templates provide a convenient way to generate SQL queries. They allow you to automatically insert various data, e.g, search conditions, so that you do not need to write each query manually. This streamlines work, prevents errors, and results in more readable code.

With Jinja templates, you can also automate generating queries that contain repeated elements. For example, you can use loops in your template to add a list of values to check in a query. This provides you with additional flexibility and enables you to write complex queries faster when you need to handle large amounts of data.

The steps below explain how to filter data in Yandex Query using a Python variable.

-

Declare the variable in Jupyter:

name = "John" -

When running the following code in the Jupyter cell, make sure to specify the

jinja2flag before executing an SQL query for it to be interpreted as a Jinja2 template%%yq <other_parameters> --jinja2 SELECT "{{name}}"Parameters:

to_yq filter

Jinja2 is a general-purpose templating engine. When processing variable values, it uses a standard string representation of data types.

For example, you have a Python list specified as lst=["Academy", "Physics"]. This is how you can use it in a Jinja template:

%%yq --jinja2

select "Academy" in {{lst}}

This will get you the Unexpected token '[' error. Jinja converts the lst variable to an ["Academy", "Physics"] string according to Python rules but disregards the Yandex Query-specific features of SQL queries, which causes the error.

To specify that Jinja conversions must comply with the Yandex Query rules, use the to_yq filter. Then, the same query in the Jinja syntax will look like this:

%%yq --jinja2

select "Academy" in {{lst|to_yq}}

Theto_yq Jinja filter converts data to the Yandex Query syntax in exactly the same manner as built-in mustache templates.

Capture command results

To capture the result of a line magic command, you can use the assignment command:

varname = %yq <query>

To capture the result of a cell magic command, you can specify the variable name and the << operator at the beginning of the query text:

%%yq

varname << <query>

Then, you can use the result as a standard Jupyter variable.

For example, this is how you capture a command result to output variables using cell magic:

output = %yq SELECT 1 as column1

And this is how you can capture a command result to output2 using line magic:

%%yq

output2 << SELECT 'Two' as column2, 3 as column3

Then, you can use these variables as standard IPython variables. For example, you can print them:

output

By default, the%yq and %%yq commands output a Pandas DataFramePandas DataFrame conversion, you can use the --raw-results argument.

The output variable in the above example will have the following structure:

| column1 | |

|---|---|

| 0 | 1 |

The output2 variable will look like this:

| column2 | column3 | |

|---|---|---|

| 0 | Two | 3 |

If a query does not imply a result, e.g., insert into table select * from another_table, the None value will be returned. If a query returns multiple results, they will be displayed as a list of individual results.

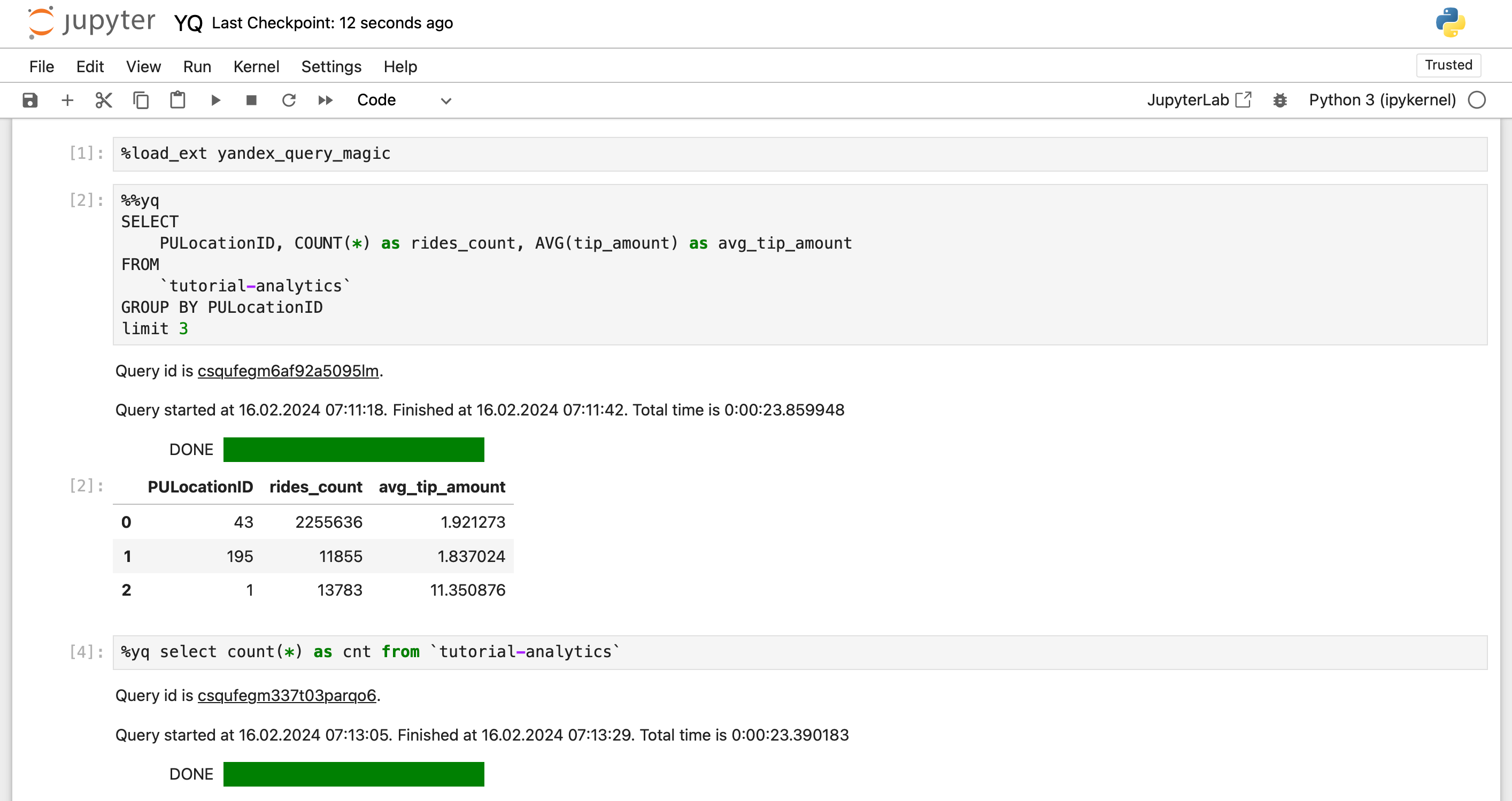

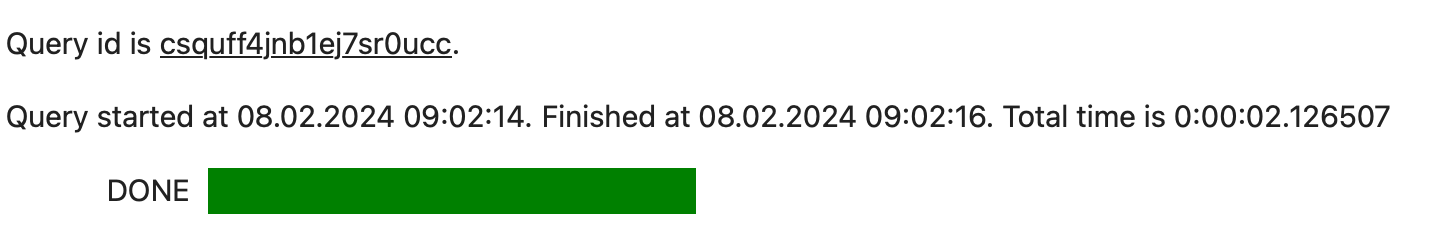

When executing a query, yandex_query_magic outputs additional data, e.g., query ID, start time, and execution duration:

To hide the execution progress information for a cell, you can use the additional %%capture command.

%%capture

%%yq

<query>

In this case, the execution progress will not be output to the console.