Source: Google Help

Real‑time data processing is a critical element for many organizations. It allows them to serve clients better and make business decisions as events happen.

As data streaming grows, Apache Kafka® has found use cases across many sectors. It is designed to handle large volumes of data in real time and to capture real‑time event data, which organizations can then use for analysis and log aggregation.

Apache Kafka, a project of the Apache Software Foundation, is an open‑source distributed platform for handling streaming data. It lets users store data and broadcast events in real time, so it acts as both a message broker and a storage system.

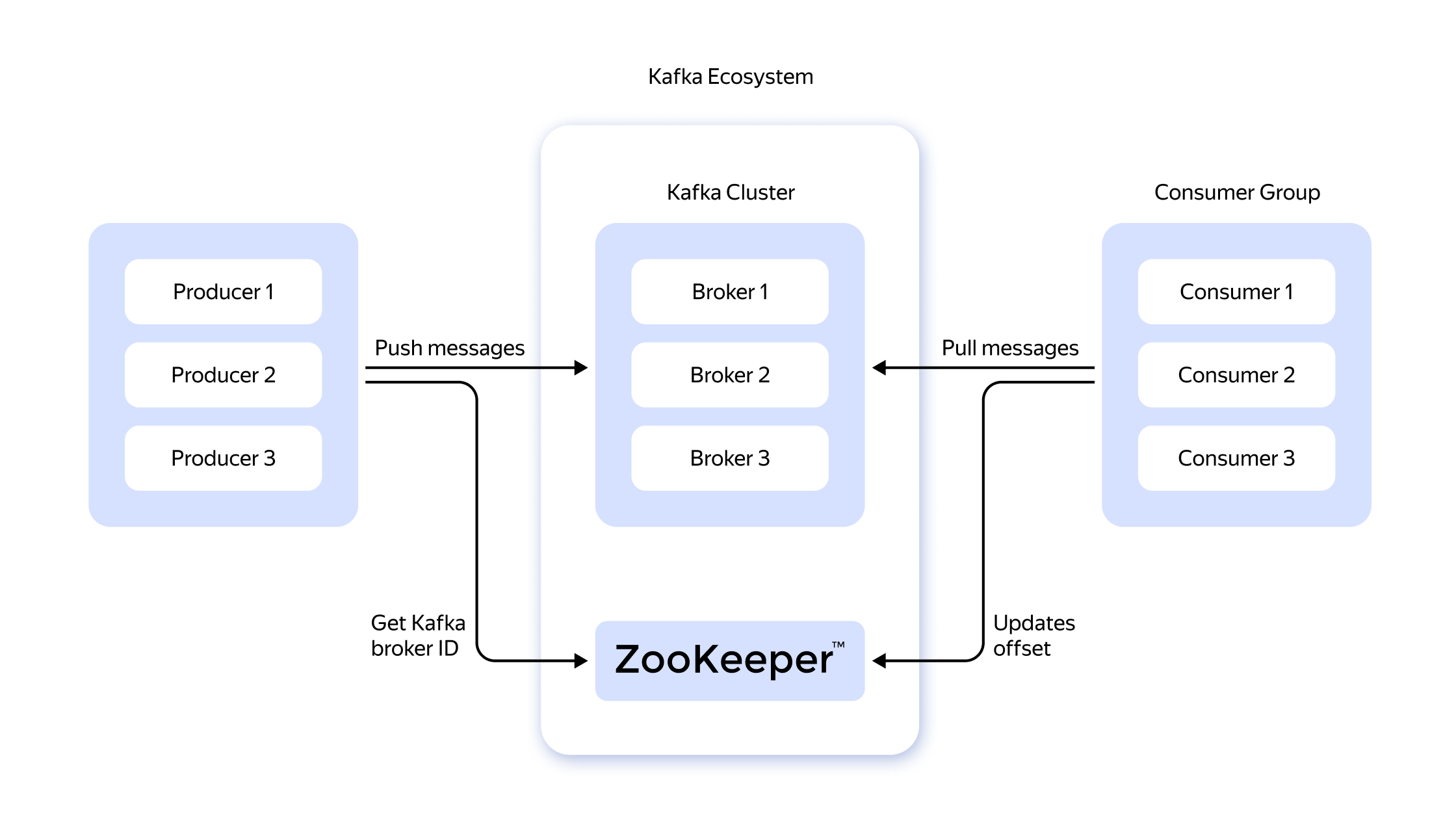

Apache Kafka is built on a publish‑subscribe messaging model with three main components: producers, the message queue, and consumers.

A producer is anything that creates data. Producers constantly write events to Kafka. Examples of producers could include web servers, other discrete applications (or application components), IoT devices, monitoring agents, and so on. For instance:

The website component responsible for user registrations produces a “new user is registered” event.

A weather sensor (IoT device) produces hourly “weather” events with information about temperature, humidity, wind speed, etc.
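The two producers above can be sketched in a few lines of Python. This is a toy, in‑memory model rather than Kafka's client API: the `Event` class, the `produce()` helper, and the topic names `user-registrations` and `weather` are hypothetical, and a real producer would send each record to a broker through a Kafka client library.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Event:
    """Hypothetical event record: topic, optional key, payload, and creation time."""
    topic: str
    key: Optional[str]
    value: dict
    timestamp: float = field(default_factory=time.time)


# Stand-in for the Kafka cluster: produced events are appended to a list.
sent: List[Event] = []


def produce(topic: str, key: Optional[str], value: dict) -> Event:
    """Hypothetical producer call; a real client would send the record to a broker."""
    event = Event(topic, key, value)
    sent.append(event)
    return event


# The registration component reports a new user.
produce("user-registrations", "user-42", {"type": "user_registered", "plan": "free"})

# The weather sensor reports its hourly reading.
produce("weather", "sensor-7", {"temperature_c": 18.5, "humidity_pct": 62, "wind_kmh": 14})
```

Each event carries the topic it belongs to, so downstream consumers can subscribe only to the kinds of events they care about.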

A message queue holds the events created by producers. In Kafka, these events are organized into topics that group similar messages. For example, a user‑activity topic could contain all events generated by users, such as logins and page clicks.

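The message queue is distributed across several brokers (servers): each topic is split into partitions that are spread across brokers and can be replicated from one broker to others. For example, partitions holding user registration events might live on one broker, while partitions holding website activity for analytics live on another. If a broker fails, a replica on another broker takes over, which makes the system robust and fault‑tolerant.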
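The placement idea can be sketched as follows. In Kafka, a topic is split into partitions, and each partition is replicated to several brokers so that another broker can take over if one fails. The `assign_partitions()` and `surviving_leaders()` functions below are hypothetical and use a deliberately simple round‑robin rule; Kafka's real replica placement and leader election (which chooses from in‑sync replicas) are more involved.

```python
from typing import Dict, List, Optional


def assign_partitions(num_partitions: int, brokers: List[str],
                      replication_factor: int) -> Dict[int, List[str]]:
    """Hypothetical placement: put each partition's replicas on distinct brokers, round-robin.

    The first broker in each list acts as the partition leader.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def surviving_leaders(assignment: Dict[int, List[str]],
                      failed_broker: str) -> Dict[int, Optional[str]]:
    """Simplified failover: the next live replica in each list becomes the leader."""
    leaders: Dict[int, Optional[str]] = {}
    for p, replicas in assignment.items():
        alive = [b for b in replicas if b != failed_broker]
        leaders[p] = alive[0] if alive else None
    return leaders


assignment = assign_partitions(3, ["broker-1", "broker-2", "broker-3"], replication_factor=2)
# {0: ['broker-1', 'broker-2'], 1: ['broker-2', 'broker-3'], 2: ['broker-3', 'broker-1']}

leaders = surviving_leaders(assignment, failed_broker="broker-2")
# {0: 'broker-1', 1: 'broker-3', 2: 'broker-3'}: every partition still has a leader
```

With a replication factor of 2, losing any single broker still leaves at least one copy of every partition.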

A consumer (or subscriber) is a computer or application that takes an action in response to an event. It subscribes to the topics relevant to it and reads new events as they arrive. These events may be used for different purposes, such as log aggregation or triggering another activity.
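A consumer's read loop can be sketched with a toy, single‑partition log. Kafka consumers track their position in a partition as an offset and read records from that offset onward; the `TopicLog` and `Consumer` classes here are hypothetical stand‑ins for that idea, not Kafka's client API.

```python
from typing import Callable, List, Tuple


class TopicLog:
    """Toy single-partition topic: an append-only list where each index is an offset."""

    def __init__(self) -> None:
        self.records: List[dict] = []

    def append(self, record: dict) -> int:
        self.records.append(record)
        return len(self.records) - 1  # offset assigned to the new record

    def read_from(self, offset: int, max_records: int = 100) -> List[Tuple[int, dict]]:
        end = min(offset + max_records, len(self.records))
        return [(i, self.records[i]) for i in range(offset, end)]


class Consumer:
    """Hypothetical consumer that remembers its offset and handles each new record."""

    def __init__(self, log: TopicLog, handler: Callable[[dict], None]) -> None:
        self.log = log
        self.handler = handler
        self.position = 0  # next offset to read

    def poll(self) -> int:
        batch = self.log.read_from(self.position)
        for offset, record in batch:
            self.handler(record)
            self.position = offset + 1
        return len(batch)


log = TopicLog()
seen: List[str] = []
consumer = Consumer(log, lambda record: seen.append(record["type"]))

log.append({"type": "login"})
log.append({"type": "page_click"})
consumer.poll()   # handles both events
log.append({"type": "logout"})
consumer.poll()   # handles only the new event
# seen == ['login', 'page_click', 'logout']
```

Because the log is not emptied when records are read, the consumer's offset alone decides what counts as "new".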

Kafka’s ability to create real‑time data pipelines and fault‑tolerant storage systems makes it ideal for supporting real‑world scenarios.

Modern users are getting accustomed to real‑time global updates. When you check the scores and commentary of a football match on a website, you get updates as they happen. Streaming platforms like Kafka make this quick, seamless data transfer possible.

Companies use Kafka in various applications, some of which we, as consumers, use daily.

Websites with millions of users generate thousands of data points every second. That activity is logged whenever you click on a page or a link. Companies use Apache Kafka to record and store events like user registrations, page clicks, page views, and item purchases. These records are grouped into relevant topics, stored across a distributed cluster, and used to calculate real‑time analytics.
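As a sketch of activity tracking, the snippet below groups hypothetical clickstream events into one topic per event type and keeps a running page‑view count, the kind of figure a real‑time dashboard would display. The event fields and the one‑topic‑per‑type layout are assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical clickstream events emitted by a website.
events = [
    {"type": "page_view", "user": "u1", "page": "/home"},
    {"type": "user_registered", "user": "u2"},
    {"type": "page_view", "user": "u2", "page": "/pricing"},
    {"type": "page_view", "user": "u1", "page": "/pricing"},
    {"type": "purchase", "user": "u1", "item": "plan-pro"},
]

# Group records into topics, one per event type (an illustrative layout).
topics = defaultdict(list)
for event in events:
    topics[event["type"]].append(event)

# A consumer of the page-view topic updates its counts one event at a time.
views_per_page = Counter()
for event in topics["page_view"]:
    views_per_page[event["page"]] += 1

# views_per_page == Counter({'/pricing': 2, '/home': 1})
```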

Some popular companies using Kafka include:

LinkedIn: The LinkedIn tech stack uses Kafka for message exchange, activity tracking, and logging metrics. With over 100 Kafka clusters, they can process 7 trillion messages daily.

Uber: With one of the largest deployments of Apache Kafka in the world, Uber uses the streaming platform to exchange data between riders and drivers.

Netflix: Netflix tracks activity for over 230 million subscribers using the Kafka platform. It stores details such as watch history and likes and dislikes to power its recommendation system.

Real‑time data processing refers to capturing, storing, and processing event data as it arrives. Conventional data pipelines run in scheduled batches and process all the information aggregated during a specified period, but Apache Kafka allows organizations to process data on the fly. Kafka captures, transforms, stores, and loads data into relevant applications in real time.
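The difference between the two approaches can be shown with a running total of hypothetical order amounts. The batch function produces an answer only once the whole batch is available, while the streaming version updates its result after every event. Both functions are illustrative and not part of Kafka.

```python
from typing import List


def batch_total(amounts: List[float]) -> float:
    """Batch style: run once the whole window of data has been collected."""
    return sum(amounts)


class StreamingTotal:
    """Streaming style: update the result as each event arrives."""

    def __init__(self) -> None:
        self.total = 0.0
        self.count = 0

    def on_event(self, amount: float) -> float:
        self.total += amount
        self.count += 1
        return self.total  # an up-to-date answer after every event


amounts = [12.5, 7.5, 30.0]  # hypothetical order amounts
stream = StreamingTotal()
running = [stream.on_event(a) for a in amounts]
# running == [12.5, 20.0, 50.0]; batch_total(amounts) == 50.0, but only after the batch runs
```

Both arrive at the same final figure; the streaming version simply has a usable answer at every intermediate point.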

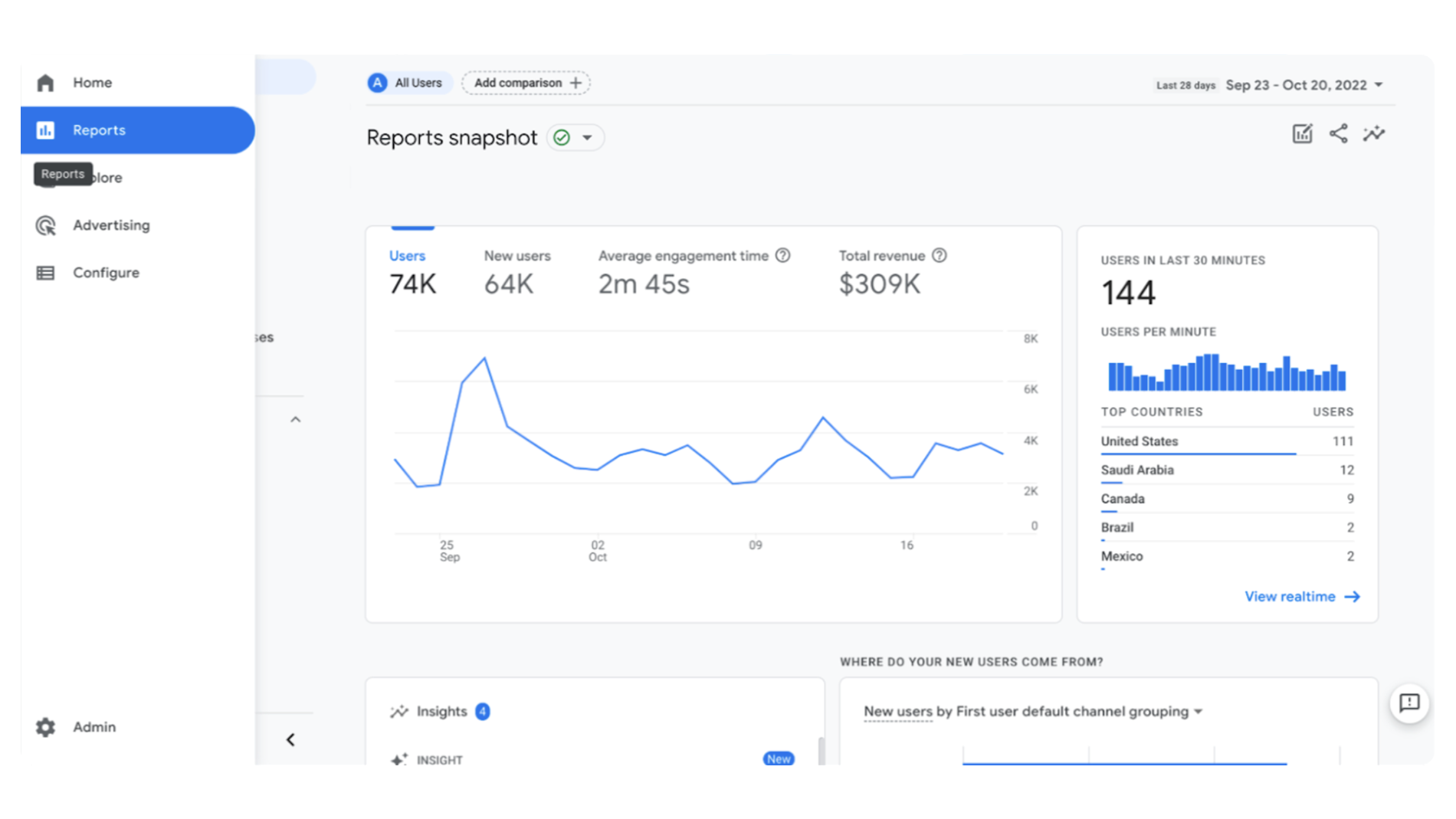

A prime example of real‑time data capture and processing is Google Analytics.

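Kafka also doubles as a message broker that facilitates communication between different applications. It receives event messages, stores them in a queue, and delivers them to consumer applications, much like other message brokers such as RabbitMQ. Kafka organizes messages into topics that consumers subscribe to, and within a topic, a message key determines which partition a message is written to, so messages that share a key stay in order. Unlike a traditional broker such as RabbitMQ, which typically removes a message once it has been acknowledged, Kafka keeps messages for a configurable retention period, so several consumers can read, or re‑read, the same stream.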
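Key‑based partitioning can be sketched as a hash of the key modulo the number of partitions. Kafka's default partitioner hashes keyed messages with murmur2; the hypothetical `partition_for()` below uses CRC32 from Python's standard library as a stand‑in, so the partition numbers will not match a real cluster, but the property is the same: a given key always maps to the same partition.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition. CRC32 stands in for Kafka's murmur2-based hash."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# (key, value) pairs in the order a producer sends them.
messages = [("user-1", "login"), ("user-2", "login"), ("user-1", "click"), ("user-1", "logout")]

partitions = defaultdict(list)
for key, value in messages:
    partitions[partition_for(key)].append((key, value))

# All "user-1" messages share one partition and keep their send order.
user1_events = [v for k, v in partitions[partition_for("user-1")] if k == "user-1"]
# user1_events == ['login', 'click', 'logout']
```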

Kafka collects operational metrics from different applications in a microservices architecture. These metrics are used to compute key performance indicators (KPIs) for application monitoring.
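As an illustration of turning a metrics stream into KPIs, the snippet below computes an error rate and a 95th‑percentile latency per service from hypothetical metric events. The field names, the services, and the choice of KPIs are assumptions; in practice a stream processor consuming a metrics topic would perform a similar aggregation.

```python
import math
from collections import defaultdict

# Hypothetical metric events emitted by two microservices.
metrics = [
    {"service": "checkout", "latency_ms": 120, "status": 200},
    {"service": "checkout", "latency_ms": 340, "status": 500},
    {"service": "checkout", "latency_ms": 95, "status": 200},
    {"service": "search", "latency_ms": 40, "status": 200},
    {"service": "search", "latency_ms": 55, "status": 200},
]


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value covering pct% of the data."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


by_service = defaultdict(list)
for m in metrics:
    by_service[m["service"]].append(m)

kpis = {}
for service, rows in by_service.items():
    errors = sum(1 for r in rows if r["status"] >= 500)  # count server errors
    kpis[service] = {
        "error_rate": errors / len(rows),
        "p95_latency_ms": percentile([r["latency_ms"] for r in rows], 95),
    }
```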

Kafka can collect log files from multiple systems and place them in centralized storage. Applications can also be configured to stream logs directly via Kafka as messages.

These messages are then persisted to disk, and logs from multiple sources can be transformed into a simpler, common format that is easier to interpret.
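The normalization step can be sketched as parsing each system's log format into one common record shape and merging the results in time order. The two log formats, their regular expressions, and the `parse_web()`/`parse_db()` helpers are hypothetical examples, not formats Kafka defines.

```python
import re
from datetime import datetime, timezone

# Hypothetical raw log lines from two systems that use different formats.
web_logs = [
    "2024-05-01T10:00:02Z WARN slow response on /checkout",
    "2024-05-01T10:00:00Z INFO GET /home 200",
]
db_logs = [
    "[ERROR] 1714557601 connection pool exhausted",  # Unix epoch seconds
]

WEB_PATTERN = re.compile(r"^(\S+) (\w+) (.*)$")
DB_PATTERN = re.compile(r"^\[(\w+)\] (\d+) (.*)$")


def parse_web(line):
    ts, level, msg = WEB_PATTERN.match(line).groups()
    when = datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return {"source": "web", "time": when, "level": level, "message": msg}


def parse_db(line):
    level, epoch, msg = DB_PATTERN.match(line).groups()
    when = datetime.fromtimestamp(int(epoch), tz=timezone.utc)
    return {"source": "db", "time": when, "level": level, "message": msg}


# Centralized store: one list of uniform records, ordered by time.
central = sorted(
    [parse_web(line) for line in web_logs] + [parse_db(line) for line in db_logs],
    key=lambda record: record["time"],
)
```

Once every record has the same fields, a single query (for example, "all ERROR entries in the last minute") works across every source.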

Real‑time processing has opened up several new opportunities in different industries. Business leaders leverage Kafka for revenue generation, customer satisfaction, and business growth. Let’s discuss a few niches that are using Apache Kafka well.

The financial sector generates millions of data points daily. The sheer number of financial transactions and customers is too much for conventional systems to handle. Apache Kafka can handle business‑critical, high‑volume workloads, giving customers a seamless experience. Moreover, banks and other financial services use it to generate real‑time analytics and to power machine learning models for applications like fraud detection.

Some popular financial services using Kafka include:

ING

PayPal

JPMorgan Chase

Building aggregated analytics can be cumbersome when running marketing campaigns across multiple platforms. Kafka can connect to multiple platforms like Google, Facebook, Twitter, or LinkedIn, gather marketing data as users interact with campaigns, and use this real‑time information to build analytics. Its low latency helps business leaders and marketing experts plan future campaigns without delay.

Start‑ups and growing e‑commerce businesses may receive thousands of orders every hour, which are challenging to handle. Swift responses and efficient customer management are key to running an online shop, but this becomes difficult when your tech infrastructure struggles to keep up with website traffic.

Kafka streamlines communication between customers and the shop owner, and its robust pipelines ensure that all events, including orders, inquiries, and cancellations, reach the business with minimal delay. This allows the business owner to respond in near real time and maintain customer satisfaction. Kafka also helps gather real‑time analytics on business performance.

The telecommunications industry uses Kafka for various purposes. It is used for real‑time stream processing to detect anomalies and monitor network performance, and it integrates information from various data sources throughout the organization, such as call records and customer data. Another prominent use case is supporting text messaging over a network and delivering messages to your phone, tablet, or computer.
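Anomaly detection on a stream can be as simple as flagging a reading that sits far from the recent average. The hypothetical `RollingAnomalyDetector` below applies a z‑score rule over a sliding window of packet‑loss readings; real telecom systems would use more robust models, and the window size and threshold here are arbitrary.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Hypothetical detector: flag a value more than `threshold` standard deviations
    away from the mean of the last `window` values."""

    def __init__(self, window: int = 5, threshold: float = 3.0) -> None:
        self.values = deque(maxlen=window)  # oldest values drop out automatically
        self.threshold = threshold

    def check(self, value: float) -> bool:
        anomalous = False
        if len(self.values) >= 2:  # stdev needs at least two points
            mean = statistics.mean(self.values)
            stdev = statistics.stdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        self.values.append(value)
        return anomalous


# Hypothetical per-second packet-loss percentages for one cell tower.
readings = [0.5, 0.6, 0.4, 0.5, 0.6, 9.0, 0.5]
detector = RollingAnomalyDetector(window=5, threshold=3.0)
flags = [detector.check(r) for r in readings]
# flags == [False, False, False, False, False, True, False]
```

This sketch adds every reading, including flagged ones, to the window; a production detector might exclude anomalies so that a spike does not distort the baseline.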

The healthcare industry benefits greatly from Kafka’s data streaming capabilities. It creates a seamless network of hospitals and clinics by building an uninterrupted communication and data transfer channel.

This universal network allows users to build healthcare‑related analytics from various data sources. It also supports knowledge sharing across institutes, which can improve research quality and shorten the time to medical breakthroughs.

A typical IoT infrastructure includes several electronic devices, a back‑end engine for processing and storage, and a communication network. Each device in this infrastructure is in constant communication with the rest of the system, sharing data that is vital for operation.

Imagine an agriculture field with several sensors spread across it. Some measure temperature, some humidity, while others keep track of the constituents of the soil. Each of these transmits this data back to a back‑end server every second. The back‑end server might generate analytics or use this data for machine‑learning forecasts.

Kafka supports this back‑and‑forth communication between devices by building a persistent channel. It gathers data from all the various sources and transports it to a centralized database. Within a partition, Kafka's message queue keeps messages in the order they were sent, so messages that share a key, such as readings from one sensor, can be processed sequentially.
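Kafka's ordering guarantee applies within a single partition, so a common pattern in IoT pipelines is to use the device ID as the message key, which keeps each device's readings in sequence. The sketch below assumes hypothetical sensor IDs and uses CRC32 as a stand‑in for Kafka's murmur2‑based default partitioner; it checks that every sensor's readings remain in the order they were sent.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 2


def partition_for(sensor_id: str) -> int:
    # Stand-in hash; Kafka's default partitioner uses murmur2 for keyed records.
    return zlib.crc32(sensor_id.encode("utf-8")) % NUM_PARTITIONS


# Readings in the order the sensors sent them: (sensor_id, sequence_number, value).
sent = [
    ("temp-1", 1, 21.0), ("soil-3", 1, 0.31), ("temp-1", 2, 21.4),
    ("humid-2", 1, 55.0), ("soil-3", 2, 0.30), ("temp-1", 3, 21.9),
]

partitions = defaultdict(list)
for reading in sent:
    partitions[partition_for(reading[0])].append(reading)  # append-only per partition


def in_order_per_sensor(partitions) -> bool:
    """Check that, within every partition, each sensor's sequence numbers only increase."""
    for records in partitions.values():
        last_seen = {}
        for sensor, seq, _ in records:
            if seq <= last_seen.get(sensor, 0):
                return False
            last_seen[sensor] = seq
    return True
```

Readings from different sensors may land in different partitions and interleave when a consumer reads several partitions, but each sensor's own sequence is never reordered.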

The gaming industry has experienced exponential growth in recent years, generating $300 billion

Apache Kafka allows fast communication between different servers and users, offering players a low‑latency experience during gaming. The real‑time event streaming capabilities benefit analytics and machine learning applications like cheater detection.

The streaming pipelines ensure that events such as player position changes are transmitted to the entire player base with low latency. Kafka's scalability also lets it accommodate a growing number of users, which is crucial given the growth of the gaming industry.
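The scalability point can be illustrated with consumer groups. Every consumer group subscribed to a topic receives all of its events, while the topic's partitions are divided among the members of each group, so adding members spreads the load. The group and member names below are hypothetical, and the plain round‑robin split in `split_among_members()` stands in for Kafka's built‑in assignment strategies (such as range, round‑robin, and sticky).

```python
from typing import Dict, List


def split_among_members(partitions: List[int], members: List[str]) -> Dict[str, List[int]]:
    """Hypothetical round-robin split of a topic's partitions within one consumer group."""
    assignment: Dict[str, List[int]] = {m: [] for m in members}
    for i, p in enumerate(partitions):
        assignment[members[i % len(members)]].append(p)
    return assignment


partitions = [0, 1, 2, 3]  # partitions of a hypothetical player-events topic

# Two independent consumer groups subscribe to the same topic.
groups = {
    "game-servers": ["server-a", "server-b"],
    "cheat-detection": ["detector-1"],
}

plan = {group: split_among_members(partitions, members) for group, members in groups.items()}
# plan["game-servers"] == {'server-a': [0, 2], 'server-b': [1, 3]}
# plan["cheat-detection"] == {'detector-1': [0, 1, 2, 3]}
```

Both groups see every partition, so cheat detection gets the full event stream while the game servers share the work between them; adding a third server would reduce each member's share.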

Despite its numerous benefits, Kafka isn’t a one‑size‑fits‑all solution. In many scenarios, Kafka’s capabilities might be overkill, and the configuration effort might be needless overhead. Below are some cases where Kafka might not be needed; however, if you’re not sure, our Solution Architects will always be happy to discuss your individual requirements.

Kafka’s strengths might lead some people to believe it is the ultimate data processing solution; however, Kafka is best suited to companies handling millions of requests and messages per day. For smaller workloads, a simpler broker service such as RabbitMQ is often a better fit.

We’ve discussed Kafka’s fast message transmission, but it has limitations in hard real‑time situations. Its scalable system works well for gaming, where occasional latency spikes are not a deal‑breaker, but Kafka is not advised for mission‑critical scenarios that require hard real‑time guarantees, that is, strictly bounded latency.

Integrating Kafka with a large‑scale legacy system can be quite a hassle. The setup requires several Kafka experts, and building the end‑to‑end architecture can take months. In such cases, it may be better to stick with conventional methods and data pipelines or to look for a managed Kafka solution.

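Apache Kafka is a fantastic tool that allows event producers and consumers to communicate seamlessly via a message queue. It offers several benefits to modern‑day systems, such as real‑time data processing, message brokering, metrics and log aggregation, and fault‑tolerant, scalable storage.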

Kafka offers a fault‑tolerant and scalable system that can accommodate several thousand users and process millions of messages daily. However, it is not necessarily the right fit for every situation.

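Kafka should be avoided when message volumes are small, when the application needs hard real‑time guarantees, or when integrating it with a large legacy system would cost more effort than the benefits justify.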
